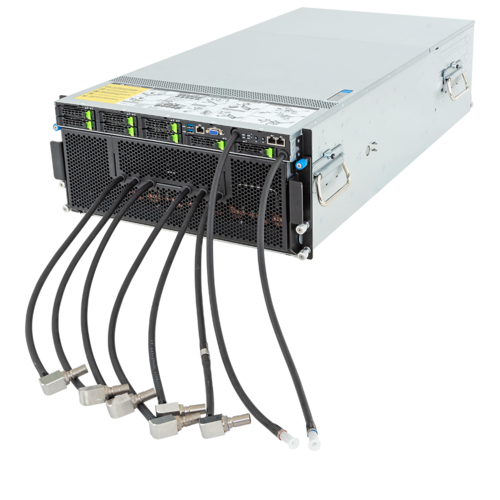

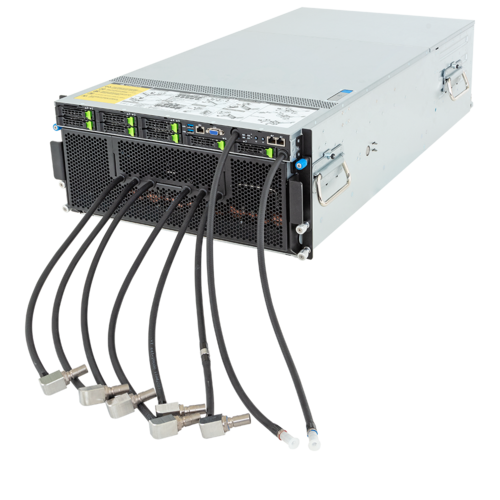

Scalable, Turnkey AI Supercomputing Solution

Unleash a Turnkey AI Data Center with High Throughput and an Incredible Level of Compute

One of the most important considerations when planning a new AI data center is the selection of hardware, and in this AI era, many companies see the choice of the GPU/Accelerator as the foundation. Each of GIGABYTE’s industry leading GPU partners (AMD, Intel, and NVIDIA) has innovated uniquely advanced products built by a team of visionary and passionate researchers and engineers, and as each team is unique, each new generational GPU technology has advances that make it ideal for particular customers and applications. This consideration of which GPU to build from is mostly based on factors: performance (AI training or inference), cost, availability, ecosystems, scalability, efficiency, and more. The decision isn’t easy, but GIGABYTE aims to provide choices, customization options, and the know-how to create ideal data centers to tackle the demand and increasing parameters in AI/ML models.

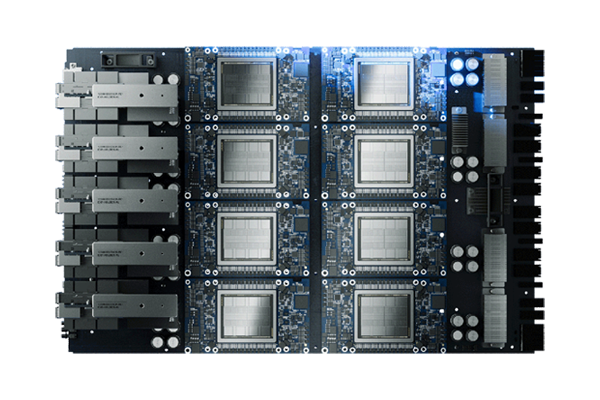

NVIDIA HGX™ H200/B100/B200

Biggest AI Software Ecosystem

Fastest GPU-to-GPU Interconnect

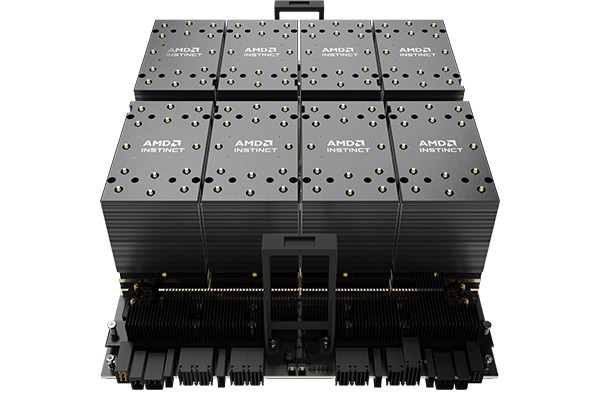

AMD Instinct™ MI300X

Largest & Fastest Memory

Intel® Gaudi® 3

Excellence in AI Inference

Why is GIGAPOD the rack scale service to deploy?

-

Industry Connections

GIGABYTE works closely with technology partners - AMD, Intel, and NVIDIA - to ensure a fast response to customers requirements and timelines.

-

Depth in Portfolio

GIGABYTE servers (GPU, Compute, Storage, & High-density) have numerous SKUs that are tailored for all imaginable enterprise applications.

-

Scale Up or Out

A turnkey high-performing data center has to be built with expansion in mind so new nodes or processors can effectively become integrated.

-

High Performance

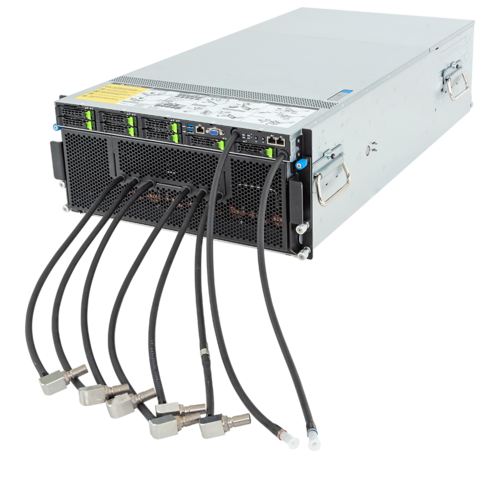

From a single GPU server to a cluster, GIGABYTE has tailored its server and rack design to guarantee peak performance with optional liquid cooling.

-

Experienced

GIGABYTE has successfully deployed large GPU clusters and is ready to discuss the process and provide a timeline that fulfills customers requirements.

The Future of AI Computing in Data Centers

GIGABYTE enterprise products not only excel at reliability, availability, and serviceability. They also shine in flexibility, whether it be the choice of GPU, rack dimensions, or cooling method and more. GIGABYTE is familiar with every imaginable type of IT infrastructure, hardware, and scale of data center. Many GIGABYTE customers decide on the rack configuration based on how much power their facility can provide to the IT hardware, as well as considering how much floor space is available. So, this is why the service, GIGAPOD, came to be. Customers have choices. Starting with how the components are cooled and how the heat is removed, customers can select either traditional air-cooling or direct liquid cooling (DLC).

1 2| Ver. | GPUs Supported | GPU Server (Form Factor) |

GPU Servers per Rack |

Rack | Power Consumption per Rack |

RDHx | |

|---|---|---|---|---|---|---|---|

| 1 |  |

NVIDIA HGX™ H100/H200/B100 AMD Instinct™ MI300X |

5U | 4 | 9 x 42U | 50kW | No |

| 2 |  |

NVIDIA HGX™ H100/H200/B100 AMD Instinct™ MI300X |

5U | 4 | 9 x 48U | 50kW | Yes |

| 3 |  |

NVIDIA HGX™ H100/H200/B100 AMD Instinct™ MI300X |

5U | 8 | 5 x 48U | 100kW | Yes |

| 4 |  |

NVIDIA HGX™ B200 | 8U | 4 | 9 x 42U | 130kW | No |

| 5 |  |

NVIDIA HGX™ B200 | 8U | 4 | 9 x 48U | 130kW | No |

| Ver. | GPUs Supported | GPU Server (Form Factor) |

GPU Servers per Rack |

Rack | Power Consumption per Rack |

CDU | |

|---|---|---|---|---|---|---|---|

| 1 |  |

NVIDIA HGX™ H100/H200/B100 AMD Instinct™ MI300X |

5U | 8 | 5 x 48U | 100kW | In-rack |

| 2 |  |

NVIDIA HGX™ H100/H200/B100 AMD Instinct™ MI300X |

5U | 8 | 5 x 48U | 100kW | External |

| 3 |  |

NVIDIA HGX™ B200/H200 | 4U | 8 | 5 x 42U | 130kW | In-rack |

| 4 |  |

NVIDIA HGX™ B200/H200 | 4U | 8 | 5 x 42U | 130kW | External |

| 5 |  |

NVIDIA HGX™ B200/H200 | 4U | 8 | 5 x 48U | 130kW | In-rack |

| 6 |  |

NVIDIA HGX™ B200/H200 | 4U | 8 | 5 x 48U | 130kW | External |

Applications for GPU Clusters

-

Large Language Models (LLM)

Training models that use billions of parameters while having sufficient HBM/memory is a challenge. And text-based data, as in LLM, thrives with a GPU cluster that has a single scalable unit with over 20 TB of GPU memory, making it ideal in scale.

-

Science & Engineering

Research in fields such as physics, chemistry, geology, and biology greatly benefit with the use of GPU accelerated clusters. Simulations and modeling thrive with the parallel processing capability of GPUs.

-

Generative AI

Generative AI algorithms can create synthetic data that is used in training AI and it can help automate industrial tasks. This is all possible by a GPU cluster using powerful GPUs with fast Infiniband networking.

Related Products

-

HPC/AI Server - 5th/4th Gen Intel® Xeon® Scalable - 5U DP NVIDIA HGX™ H200 8-GPU | Application: AI , AI Training , AI Inference & HPC

-

HPC/AI Server - AMD EPYC™ 9004 - 5U DP NVIDIA HGX™ H200 8-GPU | Application: AI , AI Training , AI Inference & HPC

-

HPC/AI Server - 5th/4th Gen Intel® Xeon® Scalable - 5U DP NVIDIA HGX™ H200 8-GPU DLC | Application: AI , AI Training , AI Inference & HPC

-

HPC/AI Server - AMD EPYC™ 9004 - 5U DP NVIDIA HGX™ H200 8-GPU DLC | Application: AI , AI Training , AI Inference & HPC

-

HPC/AI Server - 5th/4th Gen Intel® Xeon® Scalable - 5U DP NVIDIA HGX™ H100 8-GPU 4-Root Port DLC | Application: AI , AI Training , AI Inference & HPC

-

HPC/AI Server - AMD EPYC™ 9004 - 5U DP NVIDIA HGX™ H100 8-GPU 4-Root Port DLC | Application: AI , AI Training , AI Inference & HPC

-

HPC/AI Server - 5th/4th Gen Intel® Xeon® - 5U DP AMD Instinct™ MI300X 8-GPU | Application: AI , AI Training , AI Inference & HPC

-

HPC/AI Server - AMD EPYC™ 9004 - 5U DP AMD Instinct™ MI300X 8-GPU | Application: AI , AI Training , AI Inference & HPC

-

HPC/AI Server - 5th/4th Gen Intel® Xeon® Scalable - 5U DP NVIDIA HGX™ H100 8-GPU 4-Root Port (BF-3) | Application: AI , AI Training , AI Inference & HPC

-

HPC/AI Server - AMD EPYC™ 9004 - 5U DP NVIDIA HGX™ H100 8-GPU 4-Root Port | Application: AI , AI Training , AI Inference & HPC

Strong Top to Bottom Ecosystem for Success

Resources