AMD Instinct™ MI300 Series Platform

Frontier is the #1 supercomputer in the TOP500, an achievement that relies on AMD EPYC™ processors and AMD Instinct™ GPUs running the AMD ROCm™ software platform. Even the best processors on the market, such as the AMD EPYC™ 9004 series CPUs, require GPU acceleration for HPC, AI training and inference, and data-intensive workloads.

A Data Center APU and a Discrete GPU

GIGABYTE has created and tailored powerful, passively cooled servers for the AMD Instinct™ MI300 Series accelerators, including the OAM form factor used by the predecessor, the MI250 GPU. The new AMD Instinct MI300 Series accelerators have been engineered for two platforms: the MI300X GPU, an OAM module housed in GIGABYTE 5U G593 Series servers, and the MI300A APU, which comes in an LGA socketed design with four sockets in the GIGABYTE G383 Series.

El Capitan, the upcoming and likely new #1 supercomputer, exclusively deploys the MI300A APU architecture, a chiplet design in which the AMD Zen 4 CPUs and AMD CDNA™ 3 GPUs share unified memory. This means the technology can scale up to large computing clusters and also down to smaller deployments such as a single HPC server.

The AMD Instinct™ MI300 Series, comprising the MI300A and MI300X accelerators, is designed to boost AI and high-performance computing (HPC) capabilities in a compact, efficient package. The MI300A, an accelerated processing unit (APU) for each server socket, helps enable efficiency and density by integrating GPU, CPU, and high-bandwidth memory (HBM3) on a single package. The MI300X offers raw acceleration power with eight GPUs per node on a standard platform.

AMD Instinct MI300 Series accelerators aim to improve data center efficiency, address budget and sustainability concerns, and provide a highly programmable GPU software platform. The series features advanced GPUs for generative AI and HPC, high-throughput AMD CDNA 3 GPU compute units (CUs), and native hardware sparse matrix support. By enhancing computational throughput and simplifying programming and deployment, the MI300 Series addresses the escalating demand for AI and accelerated HPC amid resource, complexity, speed, and architecture challenges. The AMD Instinct MI300 Series is ready to deploy.

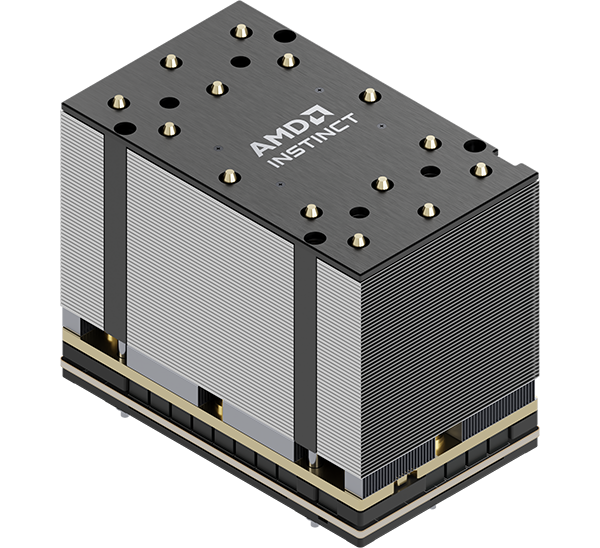

AMD Instinct™ MI300A APU


| Model | MI300A APU |
|---|---|
| Form Factor | APU SH5 socket |
| AMD ‘Zen 4’ CPU cores | 24 |
| GPU Compute Units | 228 |
| Stream Processors | 14,592 |
| Peak FP64/FP32 Matrix* | 122.6 TFLOPS |
| Peak FP64/FP32 Vector* | 61.3/122.6 TFLOPS |
| Peak FP16/BF16* | 980.6 TFLOPS |
| Peak FP8* | 1961.2 TFLOPS |
| Memory Capacity | 128 GB HBM3 |
| Memory Clock | 5.2 GT/s |
| Memory Bandwidth | 5.3 TB/s |
| Bus Interface | PCIe Gen5 x16 |
| Scale-up Infinity Fabric™ Links | 4 |
| Maximum TDP | 550W (air & liquid) & 760W (water) |
| Virtualization | Up to 3 partitions |

*Values marked with an asterisk are peak figures without sparsity.
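The MI300A figures above can be cross-checked with a little arithmetic. The following is a minimal sketch, not an official AMD or GIGABYTE tool: the dictionary keys and function names are hypothetical, the 8,192-bit aggregate HBM3 interface width is an assumption that does not appear in the table, and the four-socket count is taken from the G383 Series description earlier on this page.

```python
# Figures copied from the MI300A table above; key names are illustrative.
MI300A = {
    "cpu_cores": 24,
    "compute_units": 228,
    "stream_processors": 14_592,
    "memory_gb": 128,
    "memory_clock_gtps": 5.2,      # giga-transfers per second per pin
    "memory_bandwidth_tbps": 5.3,  # as listed in the table
}

# Assumption (not stated in the table): 8,192-bit aggregate HBM3 interface.
ASSUMED_BUS_WIDTH_BITS = 8192

# Four MI300A sockets per server, as described for the G383 Series.
SOCKETS_PER_SERVER = 4


def stream_processors_per_cu(spec):
    """Stream processors divided evenly across compute units."""
    return spec["stream_processors"] // spec["compute_units"]


def derived_bandwidth_tbps(spec, bus_width_bits=ASSUMED_BUS_WIDTH_BITS):
    """Peak bandwidth = transfer rate x bytes moved per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return spec["memory_clock_gtps"] * 1e9 * bytes_per_transfer / 1e12


def server_totals(spec, sockets=SOCKETS_PER_SERVER):
    """Aggregate cores, compute units, and HBM3 across all sockets."""
    return {
        "cpu_cores": spec["cpu_cores"] * sockets,
        "compute_units": spec["compute_units"] * sockets,
        "memory_gb": spec["memory_gb"] * sockets,
    }


if __name__ == "__main__":
    print("Stream processors per CU:", stream_processors_per_cu(MI300A))
    print(f"Derived bandwidth: {derived_bandwidth_tbps(MI300A):.2f} TB/s")
    print("Four-socket totals:", server_totals(MI300A))
```

Under the assumed bus width, the derived bandwidth rounds to the 5.3 TB/s listed in the table, which suggests the Memory Clock and Memory Bandwidth rows are consistent with each other.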

AMD Instinct™ MI300X GPU


| Model | MI300X GPU | Performance compared to MI250 |
|---|---|---|
| Form Factor | OAM module | |
| GPU Compute Units | 304 | Up 46% |
| Stream Processors | 19,456 | Up 46% |
| Peak FP64/FP32 Matrix* | 163.4 TFLOPS | Up 81% |
| Peak FP64/FP32 Vector* | 81.7/163.4 TFLOPS | Up 80% & 261% |
| Peak FP16/BF16* | 1307.4 TFLOPS | Up 261% |
| Peak FP8* | 2614.9 TFLOPS | |
| Memory Capacity | Up to 192 GB HBM3 | Up 50% |
| Memory Bandwidth | 5.3 TB/s | Up 62% |
| Bus Interface | PCIe Gen5 x16 | |
| Infinity Fabric™ Links | 7 | |
| Maximum TBP | 750W | |
| Virtualization | Up to 8 partitions | |

*Values marked with an asterisk are peak figures without sparsity.
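To put the MI300X table in node-level terms, the sketch below multiplies selected rows by the eight GPUs per node mentioned earlier and back-calculates the MI250 baseline implied by each "Up N%" entry. It is an illustrative calculation only: the key and function names are hypothetical, the implied baselines are approximate because the percentages in the table are rounded, and the node totals are simple peak sums rather than measured performance.

```python
# (value, stated uplift over MI250 in percent), copied from the table above.
MI300X = {
    "compute_units": (304, 46),
    "stream_processors": (19_456, 46),
    "fp64_matrix_tflops": (163.4, 81),
    "fp16_tflops": (1307.4, 261),
    "memory_gb": (192, 50),
    "memory_bandwidth_tbps": (5.3, 62),
}

# Eight MI300X GPUs per node, as stated in the series overview.
GPUS_PER_NODE = 8


def implied_baseline(value, uplift_percent):
    """MI250 figure implied by 'Up N%': value = baseline * (1 + N/100)."""
    return value / (1 + uplift_percent / 100)


def node_total(value, gpus=GPUS_PER_NODE):
    """Simple peak aggregate across all GPUs in one node."""
    return value * gpus


if __name__ == "__main__":
    for name, (value, uplift) in MI300X.items():
        print(
            f"{name}: node total {node_total(value):g}, "
            f"implied MI250 baseline ~{implied_baseline(value, uplift):.1f}"
        )
```

For example, eight GPUs at up to 192 GB each give up to 1,536 GB of HBM3 per node, and the "Up 50%" memory entry implies a 128 GB baseline.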